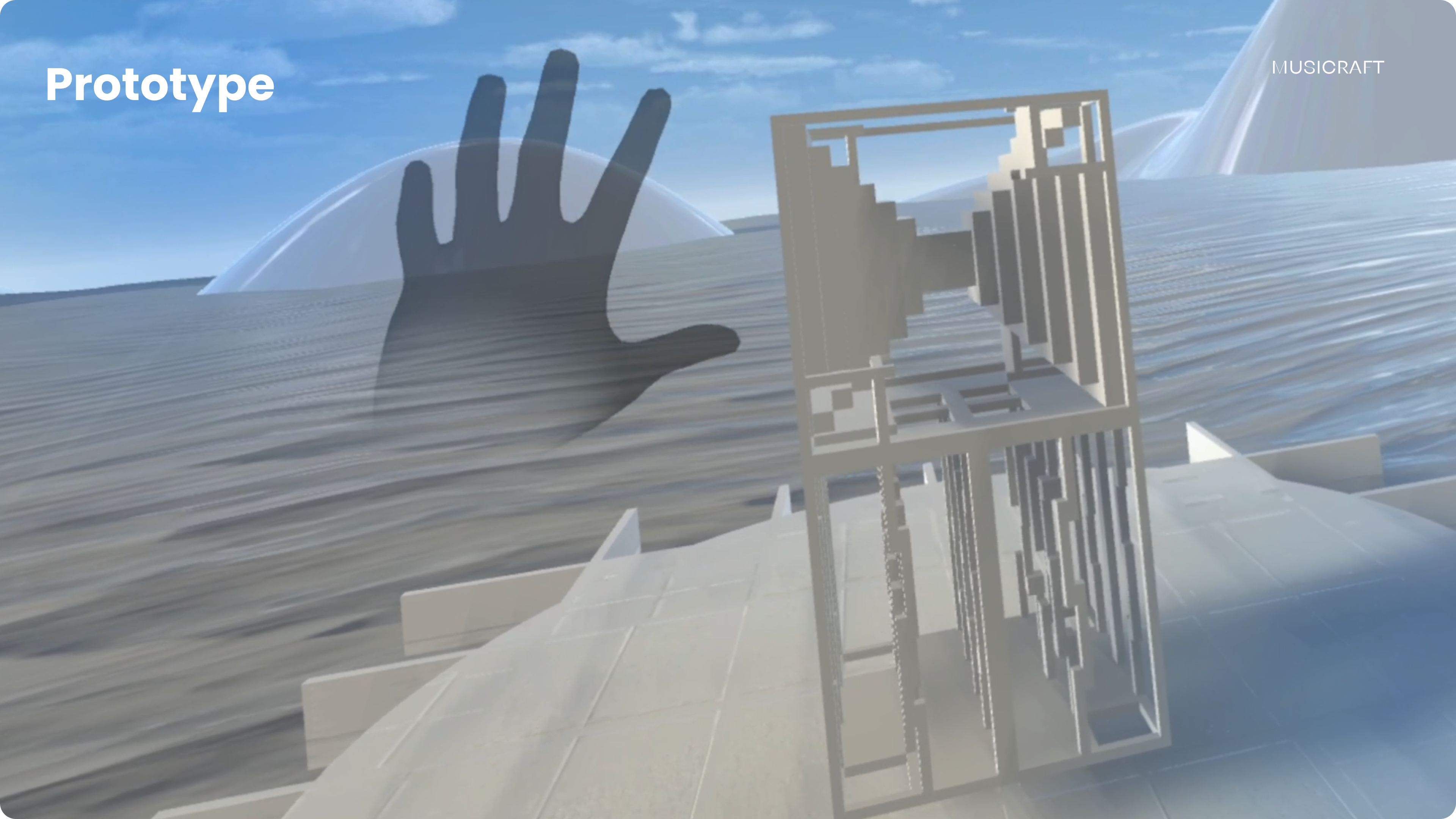

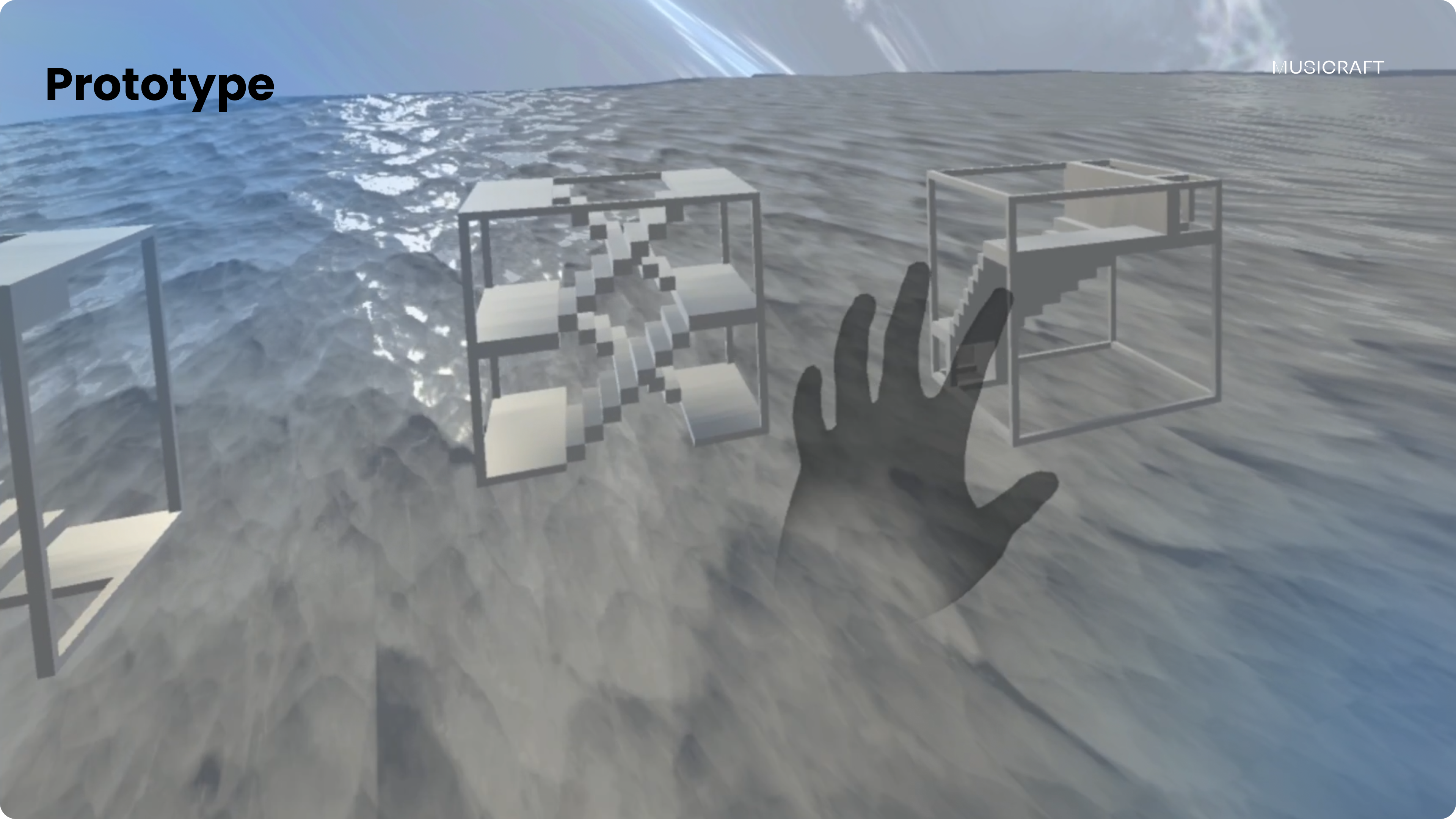

Musicraft by EMMM®

Aria Xiying Bao

3D Artist, UX Designer, Unity Developer

Nix Liu Xin

3D Artist

Emmanuel Serrano

Sound Designer

Yinghou Wang

Project Manager, 3D Artist

Davide Zhang

Unity Developer, Hardware Specialist

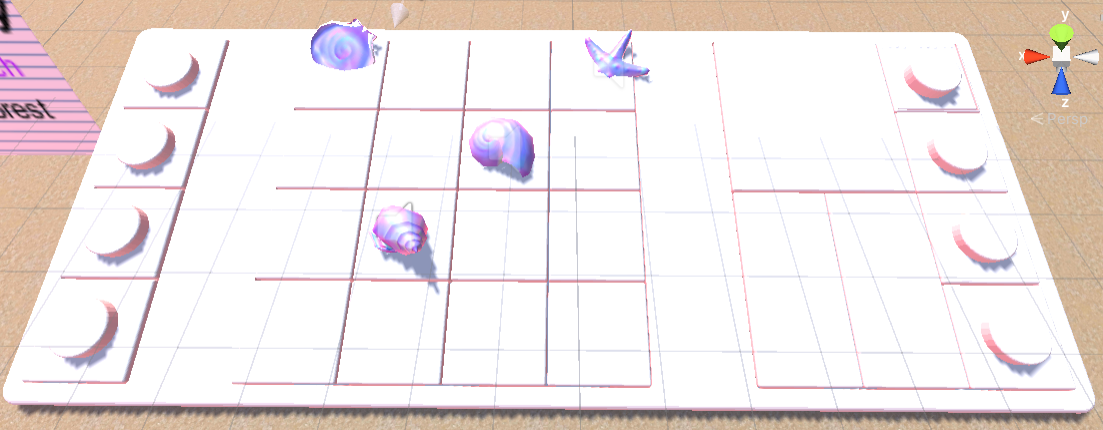

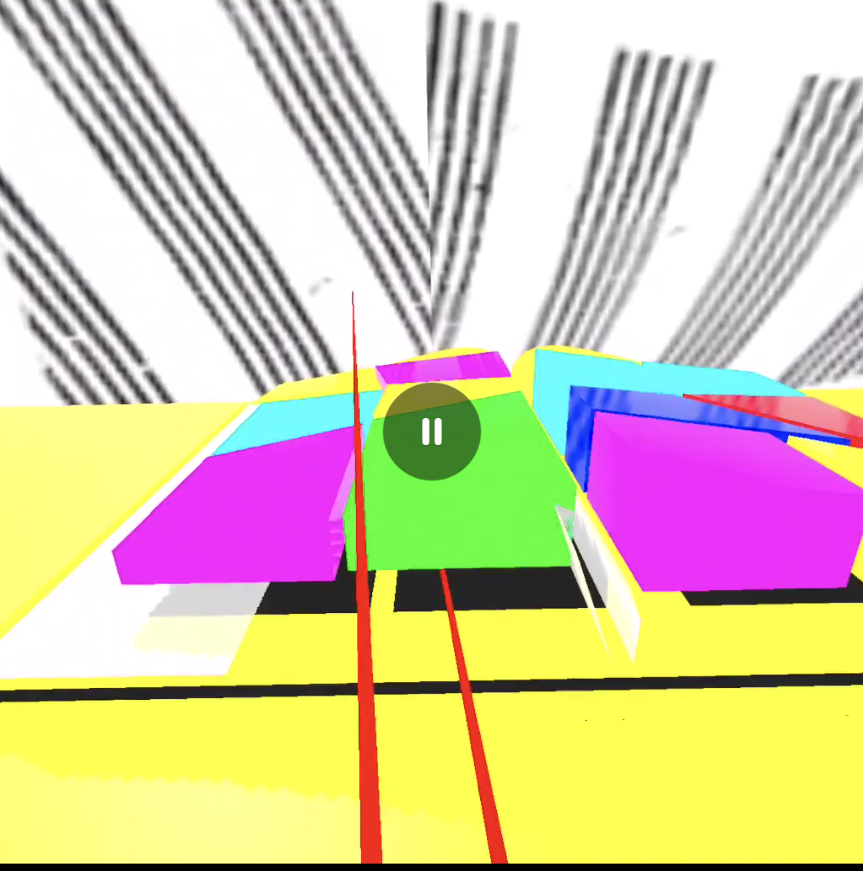

Musicraft is a VR audio-visual gaming experience and platform where the user creates and remixes music and architecture at the same time. It explores the idea that architecture is frozen music and music is flowing architecture.

In this experience, users generate their own large-scale architecture by freely interacting with the blocks. Meanwhile, audio is presented in a synaesthetic way: different interaction choices by the user lead to different visual output. Each musical rhythm and its respective building block acts as a tableau, and composing them produces a dynamic, non-linear, unpredictable, potentially infinite structure. Music, composition, and special effects all come together in an open experience that allows a non-musician, non-3D builder, or non-visual artist to create their own piece.

We look forward to seeing users’ compositions of music and architecture, born of their constant imagination and experiments, and to uncovering what’s possible when the two are remixed in Musicraft.

APK link

Magic

Jessica Boye-Doe

Nia-Simone Egerton

Prim Rattanathumawat

Yubo Zhao

Jose Pescador

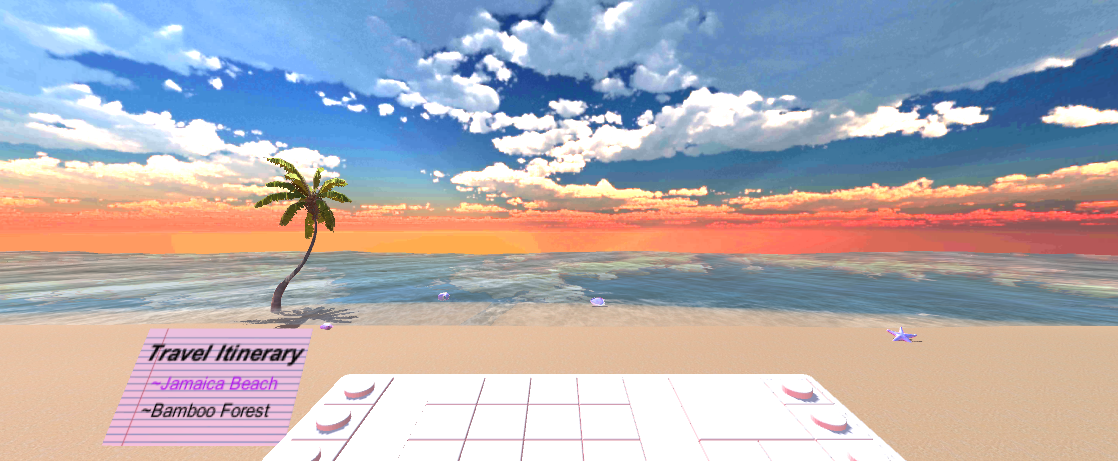

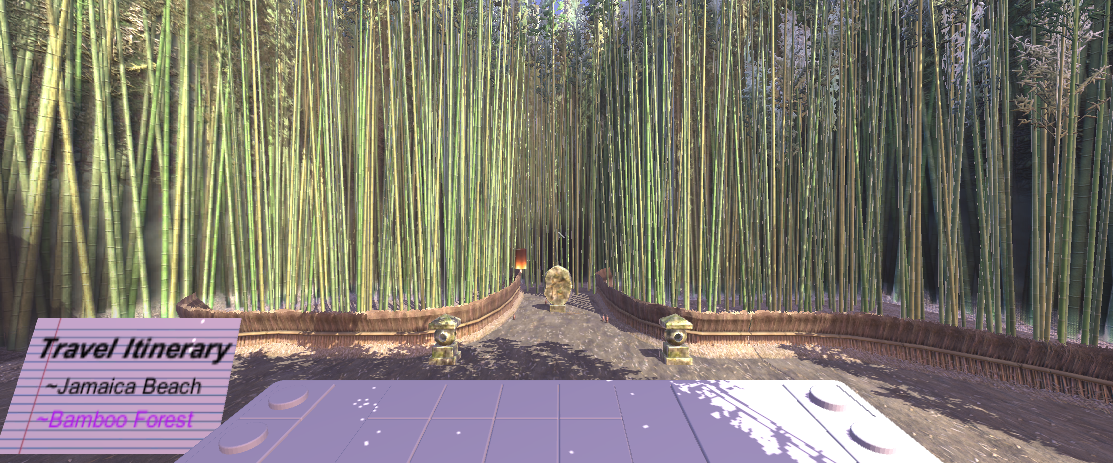

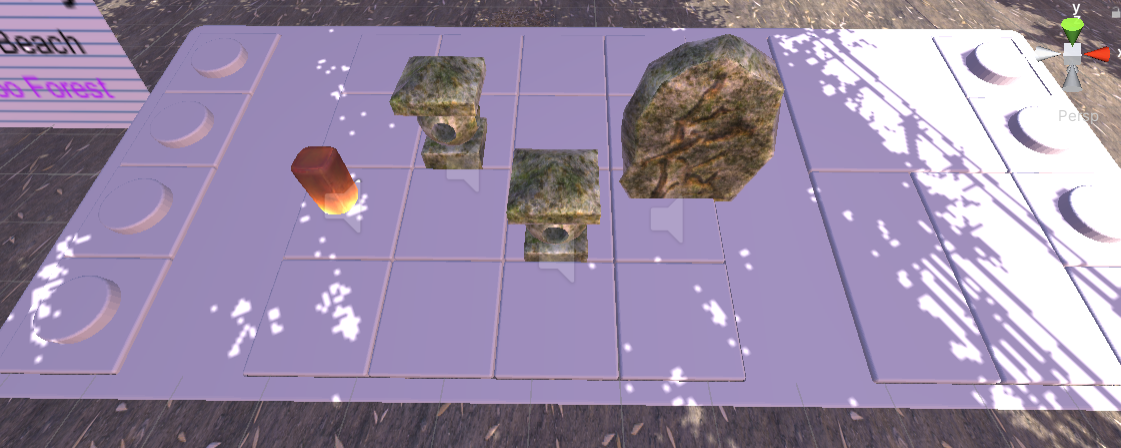

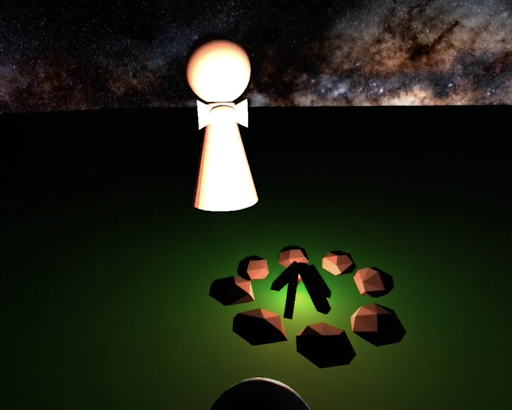

For our final project, our group decided to create a sequencer that interacts with the music inside the environment. Along with playing around with sounds inside our world, we wanted our user to get a taste of what it is like to travel across the globe. Inside our project, the user is introduced to different environments and music and, in turn, invited to play around with the objects they see. Our goal is for the user to create music with the items in front of them. We want our player to leave the experience with a feeling of satisfaction and the curiosity to continue exploring parts of the world and composition.

APK link

StageFright

Ilknur Aspir

Unity Implementation, Anxiety Intervention

Lancelot Blanchard

User Study, Programming

Ingrid Chan

Literature Review, Anxiety Intervention, Immersion

Lleyton Elliott

Hardware Specialist / Biometrics, Literature Review

Kevin Huang

Programming, Project Manager, Biometrics

Jenny Jiang

Immersion, Project Manager, Biometrics

Myron Layese

Sound Design, Biometrics, Programming

Per Pintaric

Aesthetics and UI Design, Immersion

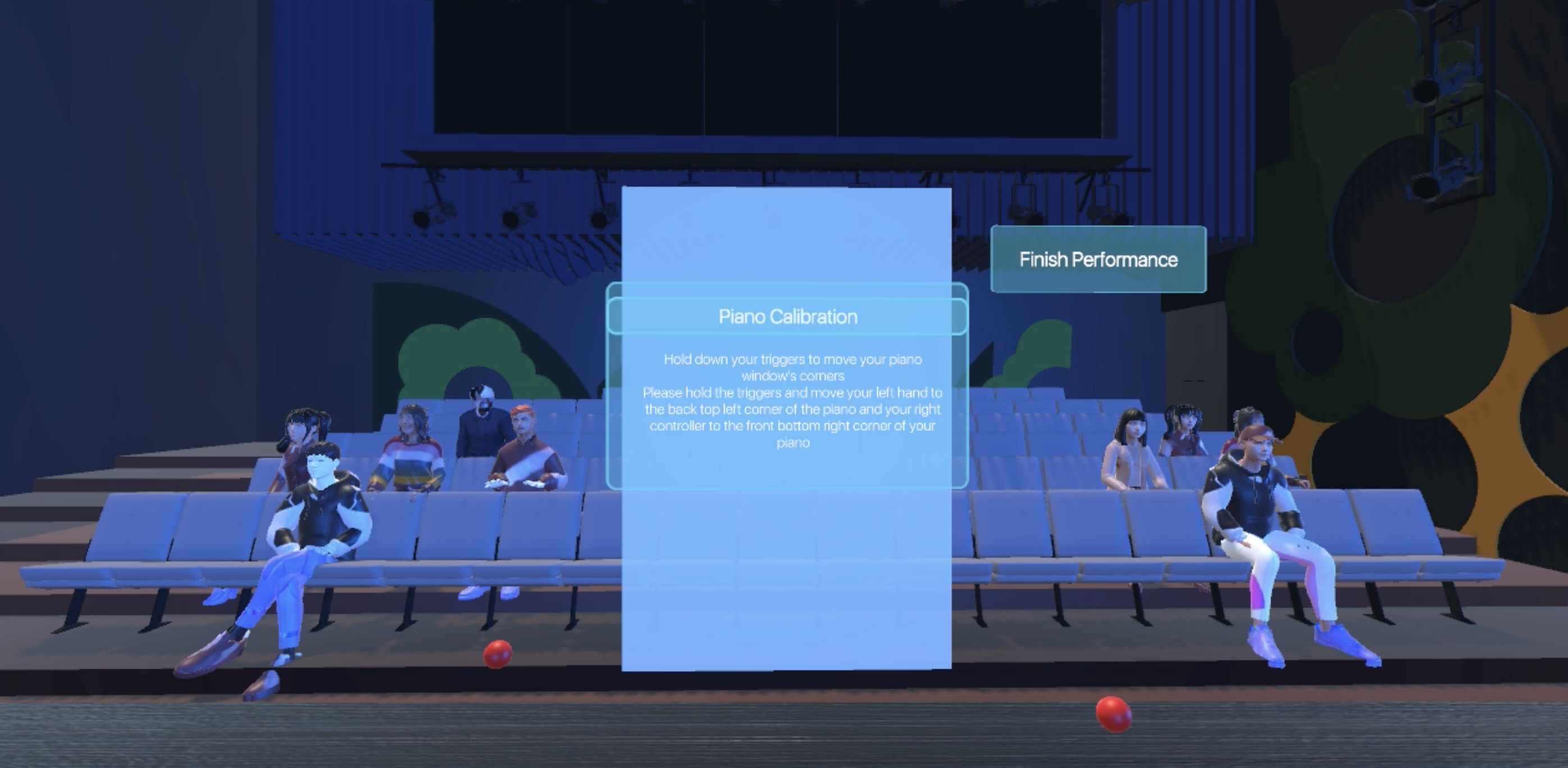

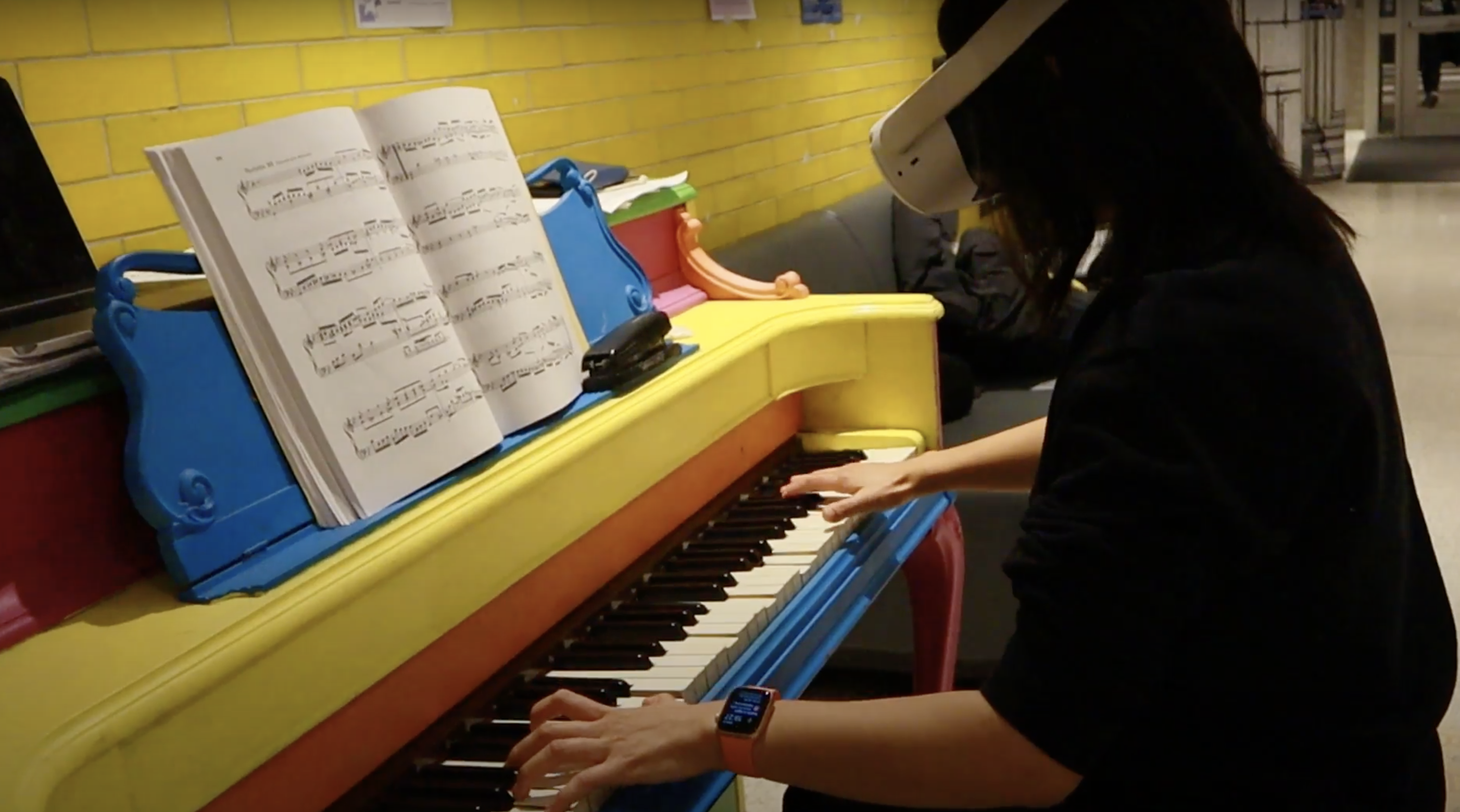

StageFright is a Mixed Reality app aiming to help performing musicians deliver more confident and relaxed performances in person. It provides a VR performance venue environment for the user to play their instrument in, as well as a waiting room and backstage area to simulate the full experience of preparing for a performance. The app offers helpful reminders and provides research-based CBT (Cognitive Behavioral Therapy) techniques to help the user relax. These are triggered by biometric sensors that detect changes in the user’s sweat level.

The user experience is as follows: waiting room → backstage → performance stage → waiting room.

This process helps the musician with 1) getting comfortable rehearsing in the venue and 2) using biometric feedback to regulate their anxiety levels through visual and auditory guidance.
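The description above says only that the relaxation techniques are triggered when the sensors detect a change in sweat level; it does not say how that change is detected. As a rough illustration, one common approach is to compare each new reading against a rolling baseline. The sketch below assumes that approach. The class name, window size, rise ratio, and sample readings are all hypothetical and are not taken from the StageFright project.

```python
from collections import deque
from statistics import mean


class SweatRiseDetector:
    """Flags a reading that rises well above the recent baseline (hypothetical sketch)."""

    def __init__(self, window=5, rise_ratio=1.2):
        self.window = window          # assumed: number of past readings forming the baseline
        self.rise_ratio = rise_ratio  # assumed: 20% above baseline counts as a rise
        self.history = deque(maxlen=window)

    def update(self, reading):
        """Return True if `reading` exceeds the rolling baseline by the configured ratio."""
        triggered = False
        # Only compare once a full window of earlier readings is available.
        if len(self.history) == self.window:
            baseline = mean(self.history)
            triggered = reading > baseline * self.rise_ratio
        self.history.append(reading)
        return triggered


def run_session(readings, detector):
    """Return the indices of readings at which a relaxation prompt would be shown."""
    return [i for i, r in enumerate(readings) if detector.update(r)]


# Made-up sweat-level readings, for illustration only.
readings = [2.0, 2.1, 2.0, 1.9, 2.0, 2.1, 3.0, 3.2, 2.2]
prompts = run_session(readings, SweatRiseDetector())
print(prompts)
```

In a real app, the point where `update` returns `True` would be where a reminder or CBT exercise is shown. A rolling baseline is used here so that the trigger responds to a change relative to the individual user rather than to a fixed absolute level.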

APK link

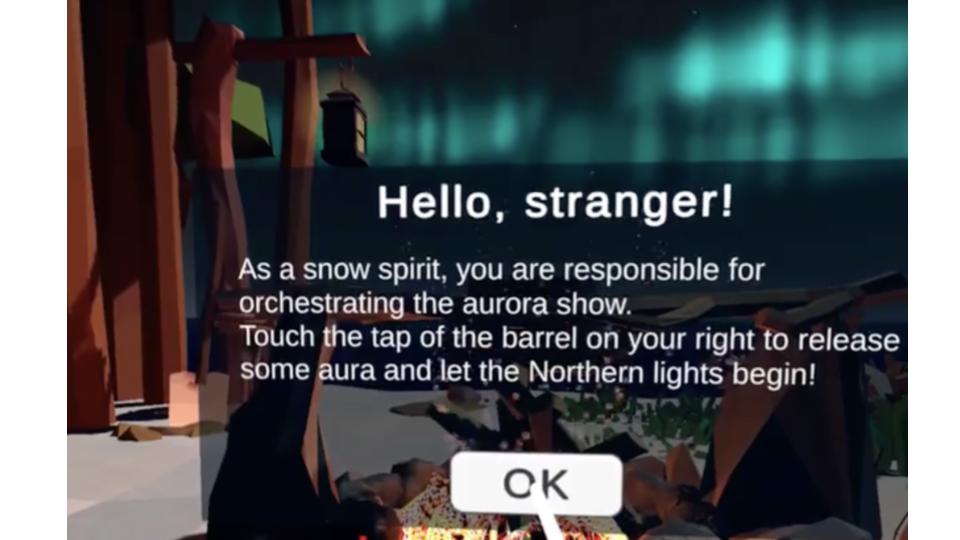

Aura

Kenny Lam

3D Level Design and Unity Programming

Mrinmoy Saha

3D Level Design and Unity Programming

Alexander Antaya

Project Manager and Audio Director/Composer

Cynthia Liu

Executive Audio Designer

“Aura” is a meditative, immersive VR experience that is (hopefully) aesthetically pleasing and heart-warming. In the story, the protagonist is a snow spirit who collects aura (mystic particles) to orchestrate an aurora show at night. The scene is an interactive experience in an arctic setting, where the player, as the snow spirit, ingests aura stored in a magic tank. In the end, as the player ingests and interacts with the aura coming out of the tank, they orchestrate an aurora show in the night sky with audio and visual elements.

APK link

Tone Soup

Lucy Nester

Lead Unity Developer, Instrument Design, Environment Design

Ari Davids

Sound and Music Design

Josh Kwok

Asset Design and Sound Effects

Peggy Yin

Conceptual/Narrative Design, Unity Developer, Environment Design

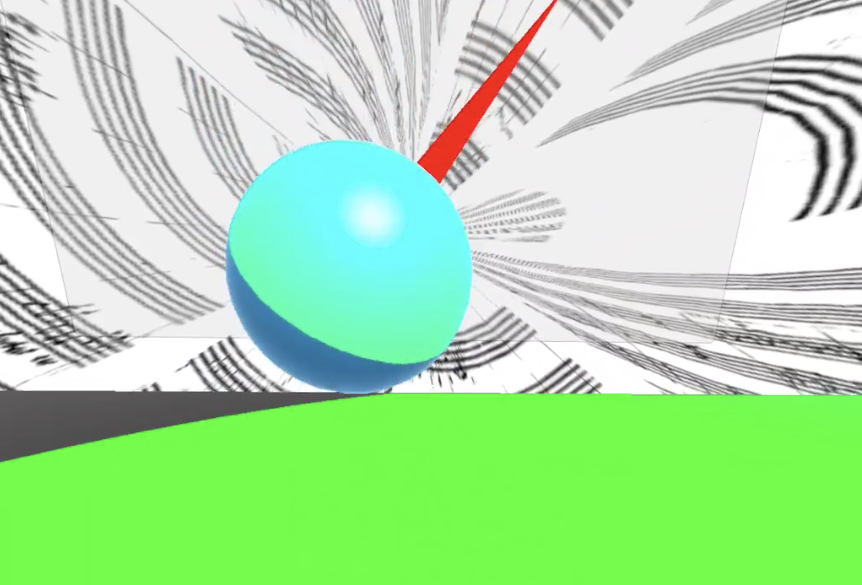

In virtual reality, music becomes technology: what we can hear and perceive as music is controlled entirely by what we can electronically synthesize, produce, and generate. This opens up worlds of possibility for the creation of new forms of music making, catalyzed by increased opportunities for radical collaboration, experimentation with neurological and physical laws, and a soundscape that has yet to be defined. Our project, Tone Soup, introduces an intuitive gestural interface to foster this sort of intense musical exchange and experimentation. Through collective user input, our VR experience will help compose the “folk music” of the metaverse from folk narratives of what it means for music, and technology, to be live.

APK link

Ken Zolot is a Senior Lecturer in the Department of Mechanical Engineering at MIT, and a Professor of Creative Entrepreneurship at Berklee College of Music. His other work can be found at http://www.mit.edu/people/zolot

Aubrey is a Unity developer and VR interaction designer. He holds an MS from the MIT Media Lab, where he wrote the thesis “An Integrated System for Interaction in Virtual Environments” as a member of the Fluid Interfaces Group, and BAs from Wellesley College in Political Science and Media Arts and Sciences. His other work can be found at aubreysimonson.com. He also made this website :)

Mateo is an Ecuadorian developer, sound artist, and recent graduate of Berklee College of Music. His research focuses on exploring computer code as an expressive medium, procedural audio, extended reality, psychoacoustics, and human-computer interaction. His other work can be found at mateolarreaferro.com.

Rob Jaczko is an independent recording engineer and record producer, and Chair of the Music Production and Engineering Department at Berklee College of Music. He is a former staff engineer at A&M Studios in Hollywood, California, where his engineering credits include Aerosmith, Vinnie Colaiuta, Sheryl Crow, Crowded House, and Hall and Oates. He is also the founder of View Works, specializing in stereoscopic 3D imaging and immersive audio solutions.
